Fast response times and substantive search in messages

Elastic is known for its monitoring stack and for Elasticsearch, a database that stores documents easily and returns search results very quickly.

Many companies have an integration platform, a so-called Enterprise Service Bus (ESB). For many people, what happens on such an ESB is unclear and invisible. Often there are built-in logging options that store the messages going back and forth in a database, and most of the time a web application has been built on top of that database so that both technical and business users can look at message details. In general this is a traditional SQL database, which has its limits: you have to decide in advance what you want to search for, and searching entire messages is difficult. Elastic makes fast response times and substantive searching in messages possible by means of a NoSQL database.

Connecting Elastic Logstash to JMS

Monitoring messages and sending them to Elastic must be non-intrusive to your current business process. The monitoring is therefore usually decoupled from the business process on the ESB, so that errors in the monitoring have no effect on the message traffic. You often do this by using a queue mechanism. This gives Elastic Logstash a great opportunity to connect to the JMS providers of your integration layer via the JMS input plugin. A number of JMS providers are supported by default, such as RabbitMQ or ActiveMQ, but connecting to JMS providers that are not supported out of the box is also possible. In this blog post, I will go into this in more detail by linking Logstash to Aurea’s JMS provider Sonic MQ. Any other JMS provider works in roughly the same way.

Getting started: how do you configure Elastic Logstash to read messages via Sonic JMS?

- In my ESB, I created a JNDI entry in Sonic for the JMS configuration (how to do this can be found in the Sonic MQ documentation);

- I created a YAML file with configuration settings that can be used by my Logstash pipeline;

- I created a pipeline definition that references the YAML file with the JMS JNDI specification (the file from step 2);

- I started Logstash and then made sure in Elastic that the created index can be used.

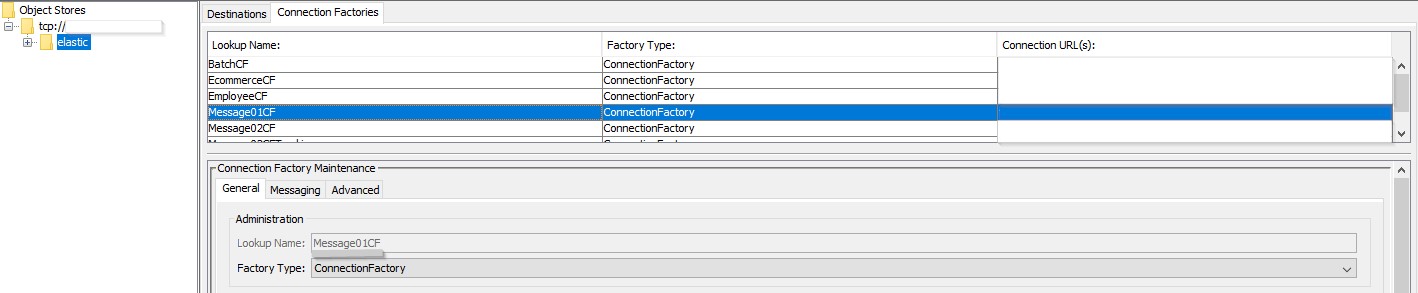

Step 1

As you can see, we created a JNDI entry in Sonic of the type “Connection Factory” with the name Message01CF. This is the JNDI name that we will refer to in our config files in the following steps.

Step 2

We create a YAML file containing the JNDI connection specifications. Let’s name this file tst_aureasonicjms_msg01.yml.

In addition to referencing the name of our JNDI entry, we also have to record how to connect to Sonic. Finally, we list the jar files that Logstash needs to connect to Sonic over JMS.

Our YML file looks like this:

    sonicmq:
      :jndi_name: elastic/Message01CF
      :jndi_context:
        java.naming.factory.initial: com.sonicsw.jndi.mfcontext.MFContextFactory
        java.naming.security.principal: Administrator
        java.naming.provider.url: tcp://localhost:2506
        java.naming.security.credentials: PASSWORD
        com.sonicsw.jndi.mfcontext.domain: Domain1
      :require_jars:
        - /opt/aurea/sonic2015/base/MQ10.0/lib/mfcontext.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_SF.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_ASPI.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_Channel.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/MFdirectory.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_Selector.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_Client_ext.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/mgmt_client.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_XMessage.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/mail.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_mgmt_client.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/mgmt_config.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/smc.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_Client.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_SSL.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/xercesImpl.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_Crypto.jar
        - /opt/aurea/sonic2015/base/MQ10.0/lib/sonic_XA.jar
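
A missing or mistyped jar path is an easy mistake to make in a file like this. As a minimal sketch, and not part of the original setup, the Python snippet below scans the YAML text for list entries ending in .jar and reports the ones that do not exist on disk. The function names are made up for illustration, and the scan is a naive line match rather than a real YAML parser.

```python
import os
import re

# Matches YAML list entries such as "- /path/to/some.jar".
# Naive line scan, not a YAML parser: assumes one unquoted jar path per line.
JAR_ENTRY = re.compile(r"^\s*-\s+(\S+\.jar)\s*$")


def jar_paths(yaml_text):
    """Return every jar path listed as a '- path.jar' entry in the text."""
    paths = []
    for line in yaml_text.splitlines():
        match = JAR_ENTRY.match(line)
        if match:
            paths.append(match.group(1))
    return paths


def missing_jars(yaml_text):
    """Return the listed jar paths that are not regular files on this machine."""
    return [path for path in jar_paths(yaml_text) if not os.path.isfile(path)]
```

Run on the Logstash host against the contents of tst_aureasonicjms_msg01.yml, missing_jars should return an empty list before you start the pipeline.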

Step 3

We now set up a Logstash pipeline that connects to Sonic via the JMS input plugin. In the input section, you define the queue (destination) from which you want to consume messages. This can also be a topic; in that case, enable the plugin’s pub_sub option and make sure you have defined the correct connection factory in your JNDI entry.

In our example, we connect to Sample.Q1 to read messages. The yaml_file and yaml_section settings tell Logstash where to find the configuration for setting up the JNDI connection to Sonic.

In the filter section, I add an extra field so that, for every environment in my DTAP pipeline, I know where each message comes from. I also delete unnecessary fields: in this case, any field whose name contains jms or JMS, or matches transactionID.

In the output section, you provide the information needed to connect to your own Elastic cluster and choose the correct index.

    input {
      jms {
        id => "SonicJMS"
        include_header => false
        include_properties => true
        include_body => true
        use_jms_timestamp => false
        interval => 10
        destination => "Sample.Q1"
        yaml_file => "/opt/elastic/logstash_jms_config/tst_aureasonicjms_msg01.yml"
        yaml_section => "sonicmq"
      }
    }

    filter {
      mutate {
        add_field => { "host" => "SonicTst" }
      }
      prune {
        blacklist_names => [ "jms", "transactionID", "JMS" ]
      }
    }

    output {
      elasticsearch {
        hosts => "URL-OF-YOUR-ELASTIC-CLUSTER"
        index => "INDEX-NAME"
        user => "USER"
        password => "PASSWORD"
      }
    }
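
To make the effect of the prune filter more tangible: to my understanding of the plugin, each entry in blacklist_names is treated as a regular expression and matched, unanchored, against the top-level field names of the event. The Python sketch below mimics that behaviour on a plain dictionary. The sample field names are invented for illustration, and this is not the plugin's actual code.

```python
import re

# Same patterns as blacklist_names in the pipeline definition.
BLACKLIST_NAMES = ["jms", "transactionID", "JMS"]


def prune(event, patterns=BLACKLIST_NAMES):
    """Drop every top-level field whose name matches one of the patterns."""
    compiled = [re.compile(pattern) for pattern in patterns]
    return {
        name: value
        for name, value in event.items()
        if not any(rx.search(name) for rx in compiled)
    }


# Hypothetical event; real field names depend on your JMS headers and properties.
sample = {
    "message": "<order id='42'/>",
    "host": "SonicTst",
    "jms_destination": "Sample.Q1",
    "JMSXDeliveryCount": 1,
    "transactionID": "abc-123",
}

print(sorted(prune(sample)))  # ['host', 'message']
```

If you only want to remove fields that start with a given prefix, anchoring the pattern (for example ^jms) is the regular-expression way to express that.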

Two useful tips to keep in mind

- On top of the JMS specification, Sonic has its own extension: the Multipart principle. A Multipart JMS message is actually multiple JMS messages in one, which is not covered by the standard specification. As a result, it is not supported by the Elastic JMS implementation, and as a Sonic user you will always have to make sure that you do not deliver JMS Multipart messages to Elastic;

- All JMS properties of a message are automatically converted to fields of your index in Elasticsearch. This is very convenient and flexible.

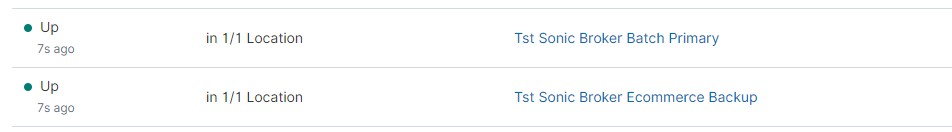
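
Because the message body and the JMS properties all end up as fields, you can combine a full-text search in the message with a filter on a property. The sketch below only builds the request body for the Elasticsearch _search endpoint. The field names (message for the body, host for the field added in the filter section) are assumptions about how the events are indexed in your setup, and the function name is hypothetical.

```python
import json


def build_message_query(text, field_filters=None, body_field="message"):
    """Build a bool query: full-text match on the body, match clauses as filters."""
    filters = [
        {"match": {name: value}}
        for name, value in (field_filters or {}).items()
    ]
    return {
        "query": {
            "bool": {
                # Scored full-text search in the message body.
                "must": [{"match": {body_field: text}}],
                # Filter context: narrows the result set without affecting the score.
                "filter": filters,
            }
        }
    }


query = build_message_query("timeout", {"host": "SonicTst"})
print(json.dumps(query, indent=2))
```

The resulting JSON can be sent as the body of a POST request to /INDEX-NAME/_search on your cluster, or pasted into the Kibana Dev Tools console.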

As a small bonus, consider using Elastic Heartbeat to monitor your JMS layer: is it up and reachable? By defining a TCP monitor in the Heartbeat configuration, you can keep an eye on the JMS layer of your ESB.

    - type: tcp
      id: tcp-sonic-batch-primary
      name: Tst Sonic Broker Batch Primary
      schedule: '@every 5s' # every 5 seconds from start of beat
      hosts: ["IPHost"]
      ports: [3526]

    - type: tcp
      id: tcp-sonic-ecom-primary
      name: Tst Sonic Broker Ecommerce Primary
      schedule: '@every 5s' # every 5 seconds from start of beat
      hosts: ["IPHost"]
      ports: [3716]
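
What a TCP monitor checks is essentially whether a connection to the given host and port can be opened. For a quick manual check of the same thing, a minimal Python sketch could look like this. The function name and the timeout are my own choices, and Heartbeat itself does considerably more (scheduling, timing, shipping the results to Elasticsearch).

```python
import socket


def tcp_is_up(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened in time."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

For example, tcp_is_up("IPHost", 3526) checks the first broker port from the configuration, with IPHost replaced by the address of your own broker.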

Uptime in Kibana

Conclusion

Although not all JMS providers are supported by Elastic out of the box, it is very easy to connect Logstash to JMS. You now have the option of logging your JMS messages in one Elastic environment and combining them with information from Heartbeat, so you know whether your JMS layer is still active. You can also add your log files. This combination yields many insights that were not available before.

At Devoteam, we have done this for customers with Tibco EMS and with Sonic MQ to their full satisfaction. In this way we provide customers with a stable logging environment that is ready to grow as the integration landscape changes.

At Devoteam, we believe it is crucial to be in control of your IT Landscape and Business Operations. We see centralized monitoring as the #1 solution to get you in charge. We do this with Elastic, our trusted partner, and market leader in monitoring solutions. Together, we apply value-adding technology at scale, with a proven track-record at customers such as Renewi and De Watergroep. At Devoteam we shape technology for people.